Like many geeks, I enjoy unique puzzles, especially when the solution is mathematically interesting. (Puzzles with nicely-defined moves / positions / candidate solutions, and which require more thought than dexterity are the best.) I have a small collection of interesting physical puzzles (which might make an interesting article someday.)

A while ago, my wife found a wooden puzzle cube. Figuring that it was inexpensive, somewhat educational, more or less nontoxic, and might keep me from bothering her for a few hours while she studied for her Pharmacy exams, she bought it for me. (Guys never really do grow up…)

The premise is pretty simple. Twenty-seven wooden blocks are joined together by elastic thread. At sixteen points in the chain, the thread makes a right angle. When twisted into the right configuration, the blocks can be formed into a 3x3x3 cube. This is more difficult than it seems: there are some four billion possible combinations, most of which seem to be impossible, since the blocks would overlap. At least one solution must work, since the cube was initially in solved form when bought.

I played with the cube for a while, eventually solving it again, and decided it would make an interesting problem for a genetic algorithm.

Two things are needed to make a genetic algorithm (GA) work: a way to express the solution as a bitstream (or string using a fixed alphabet of characters), and a method of evaluating a candidate solution (bitstream or string). With these two pieces, the genetic operators of crossover and mutation (with possible others added) can be repeatedly applied to a population of solutions. Artificial selection using the fitness function is used to choose the next population.

Since at each point in the cube, four directions are possible, I settled on expressing candidate solutions as strings of characters — either N, S, E, or W. I arbitrarily chose “North” to represent “up” (positive Y direction) wherever possible and “away” (positive Z direction) when “up” was not possible. Every string of these directions would represent a valid — if not viable — candidate solution. (The distinction is central to how Genetic Algorithms work; each candidate solution, even completely randomly-generated ones, must be valid. That is, it must be a string of the right length, drawn from the acceptable alphabet — in this case, N, S, E, or W. The solution may make no sense at all — with blocks overlapping multiple times and still not fitting into the desired 3x3x3 square — but it will be able to be evaluated, since any possible solution is “gramatically” correct.)

Candidate solutions are graded on the size of the rectangular prism “bounding box” needed to contain the resulting configuration, with smaller numbers being better. (The ideal 3x3x3 nonoverlapping solution would have a fitness of 27.) The problem of overlap was handled by penalizing solutions which overlapped — in this case, ten blocks per overlap was chosen as an arbitrary penalty.)

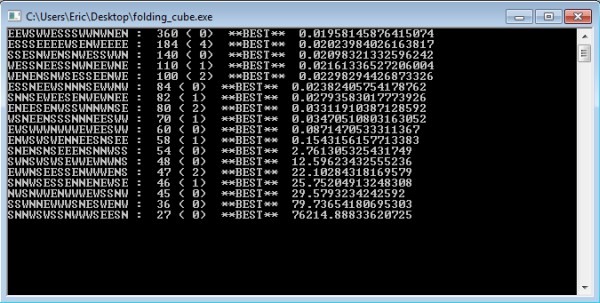

I first wrote a fitness evaluation routine, and tested it on a known-good solution. It returned the expected value of 27, so I set up a naive random-string-generator algorithm which simply tried random strings, keeping the best-so-far solution. It came up with reasonably good guesses in a short amount of time, but took 21.17 hours to come up with an optimal, fitness-27 solution: SNNESESSNWWWSEESN.

The results for a random search. After more than 21 hours, it finally found it...

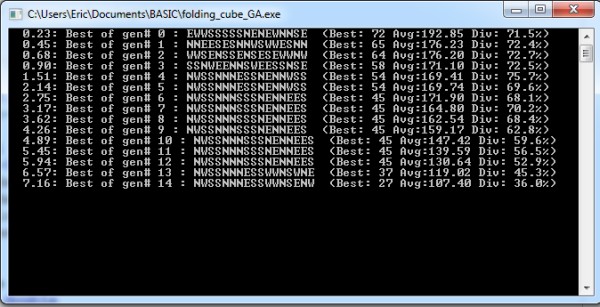

Next, I tried a genetic algorithm approach. An initial population of sequence strings was generated and evaluated. (The fitness function used was the same as before — the size of the bounding box needed to contain the result, plus ten times the number of overlapping cubes.) The fitness results were used to select individuals to be used to create the next generation. Uniform crossover and uniform random mutation were applied. It took a bit of tweaking to find optimal values (more on that later), but one GA run found a correct solution (although a different solution than the random one: SSNWSWSNNWEESEWSS) in 20.45 seconds (the 40th generation with a population size of 1000). A slightly different run found a third optimal solution — NWSSNNNESSWWNSENW — in 7.16 seconds (14 generations).

Optimal solution found in 14 generations (with population size 1,000). Only 15,000 candidate solutions (out of over four billion) were searched!

Those results represent GA runs where an optimal solution is found, so they’re a bit optimistic. Using the GA settings that I’ve come across so far, I’ve found that the GA tends to either find an optimal solution relatively quickly, or doesn’t find one within quite some time (if ever).

I’m currently working towards characterizing the effects of varying the mutation rate and fitness / selection criteria on the overall probability of the GA finding an optimal solution. For now, though, restarting randomized runs of the GA after a hundred or so generations pass without a solution seems to do the trick; a solution is usually found sometime in the first five or ten minutes — still a speedup of over a hundred times compared to the blind random search procedure.

Myth confirmed: Darwinian evolution does work — at least in silico.