Once again, Scott Adams has it right:

I never really understood most of the methodologies behind “software engineering.” Yes, a definite code-review process and an overall plan are certainly needed for projects of any reasonable size, but to me, the main takeaway from the “Software Engineering” course I took was that there is a *lot* of management going on, and it doesn’t seem to be especially effective. We clearly still have not eliminated software bugs, and yet we were told in the course that twenty or thirty lines of code per day is an optimistic figure for large projects.

This seems ridiculously inefficient to me. If a project is broken down into manageable modules, coding should scale reasonably well across project sizes and across varying numbers of coders working on the project (assuming that one coder works on one module at a time).

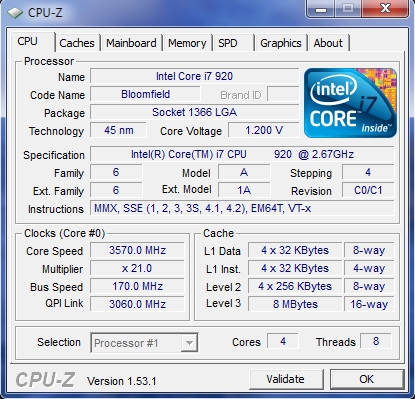

What seems even more insane is the idea of “pair programming” — where two programmers work in close cooperation as a team, with one computer. One programmer “drives” (writing the code) while the other “rides shotgun” and apparently just watches the first one code — presumably to point out mistakes as they are made and to provide running commentary. How can this possibly be more efficient than providing each programmer with his or her own computer, even if it’s a ten-year-old eMachines piece of crap? A cheap new PC costs less than $500 these days — less than a week’s salary for a programmer.

How about this for a methodology:

* The software group meets to nail down specifications for the project, decides on a language or languages, and writes these specifications down. All design is high-level at this point, and the focus is on whether this can be done in the time allowed and within the proposed budget. The client and lead developer share responsibility for the final version of this document. Input is welcomed from all developers, but the client and lead developer sign off on the master plan. (This part should take no more than a day for most projects, and probably an hour or two for many. For something huge like a new version of an OS, perhaps a few weeks at most.)

* Once the master plan is in place, the developers meet to break the coding down into modules. Each module is described in a module contract document, specifying what language or languages it will be written in, the maximum resources allowed, the deadline for module completion, and (most importantly) what values the module will be passed and what values it will pass to other modules, along with their types. (For instance, a “square root” module would be specified to accept a single parameter of type double and return a single value, also of type double.) Any possible error conditions should also be enumerated, along with how to handle them. For the square root function, if it is passed a negative number as input, should it return zero, somehow have the ability to return a complex number, or call an error routine?

* Once the contract for each module is written, the module is assigned to a team, ideally consisting of a single programmer. This programmer (or, for a larger team, the one individual responsible for the module) writes the code and ensures that it meets the specifications.

* Once the module is written, it is sent to one or more other programmers (perhaps anonymously) for testing, along with its specification document. They run it against every test case, or, if that is impractical, choose a representative set of test cases that they feel has the best chance of turning up errors, and note how it performs.

* The “main” program is handled as a module like any other, with its own specification contract document.
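As a rough illustration, the square-root contract above could also be written down in code, so that the module’s author and the independent tester work from exactly the same specification. This is only a sketch under stated assumptions: `ModuleContract`, `NegativeInputPolicy`, `square_root`, and `check_against_contract` are hypothetical names, not part of any existing tool. Python’s `float` stands in for “type double,” and this contract picks the “call an error routine” option (raising `ValueError`) for negative input.

```python
import cmath
import math
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class NegativeInputPolicy(Enum):
    """The three options the contract must choose between for negative input."""
    RETURN_ZERO = "return zero"
    RETURN_COMPLEX = "return a complex number"
    RAISE_ERROR = "call an error routine"


@dataclass(frozen=True)
class ModuleContract:
    """Hypothetical machine-readable summary of a module contract document."""
    name: str
    language: str
    param_types: tuple
    return_type: type
    on_negative_input: NegativeInputPolicy


# Python's float is an IEEE 754 double, matching "type double" in the contract.
SQRT_CONTRACT = ModuleContract(
    name="square_root",
    language="Python",
    param_types=(float,),
    return_type=float,
    on_negative_input=NegativeInputPolicy.RAISE_ERROR,
)


def square_root(x: float) -> float:
    """The module as written by its assigned programmer."""
    if x < 0:
        # The contract chose "call an error routine": signal it with ValueError.
        raise ValueError(f"square_root: negative input {x!r}")
    return math.sqrt(x)


def check_against_contract(contract: ModuleContract,
                           func: Callable[[float], object]) -> list:
    """Run by a second programmer who sees only the contract and the module."""
    failures = []
    # Representative cases: zero, perfect squares, and an irrational result.
    cases = [(0.0, 0.0), (1.0, 1.0), (9.0, 3.0), (2.0, math.sqrt(2.0))]
    for arg, expected in cases:
        result = func(arg)
        if not isinstance(result, contract.return_type):
            failures.append(f"{arg!r}: returned {type(result).__name__}")
        elif not math.isclose(result, expected, rel_tol=1e-12):
            failures.append(f"{arg!r}: expected {expected!r}, got {result!r}")

    # The error case is tested according to whichever policy the contract chose.
    policy = contract.on_negative_input
    try:
        result = func(-1.0)
    except ValueError:
        if policy is not NegativeInputPolicy.RAISE_ERROR:
            failures.append("-1.0: raised ValueError, contract says not to")
    else:
        if policy is NegativeInputPolicy.RAISE_ERROR:
            failures.append("-1.0: did not raise ValueError")
        elif policy is NegativeInputPolicy.RETURN_ZERO and result != 0.0:
            failures.append(f"-1.0: expected 0.0, got {result!r}")
        elif (policy is NegativeInputPolicy.RETURN_COMPLEX
              and result != cmath.sqrt(-1.0)):
            failures.append(f"-1.0: expected 1j, got {result!r}")
    return failures
```

For example, `check_against_contract(SQRT_CONTRACT, square_root)` returns an empty list. A version that silently computes `math.sqrt(abs(x))` is flagged, because it returns 1.0 for -1.0 instead of calling the error routine the contract specifies. The tester never needs to read the module’s source, only its contract.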

This methodology, I think, would help cut through a lot of the red tape associated with “software engineering” while providing a way to ensure that the code produced is sound. It also avoids the “extreme programming” idea of having programmers working in teams, which to me sounds a lot like trying to duct-tape cats together to make a better mouse-catching machine.