When comparing the performance of computers across several generations (and often, several orders of magnitude in speed), it can sometimes be challenging to find an appropriate computational task that is scalable across a large enough range. Tasks that can take hours on a machine from the 1970s or 1980s (or earlier) can sometimes only take fractions of a second on a modern PC, skewing the results due to noncomputational overhead and quantization errors.

Fortunately, there exist several types of task that make at least limited speed comparisons possible. One such task for measuring integer-operation speed is the computation of the sums of the first 10^N prime numbers.

Prime numbers occur regularly enough to be a good benchmark, and sums of the first N primes are well-known, allowing verification of not only speed but algorithm correctness. This is a task that can both be accomplished by almost any computing device created — and yet can challenge even modern supercomputers. The task scales itself to the processor speed, even over many orders of magnitude.

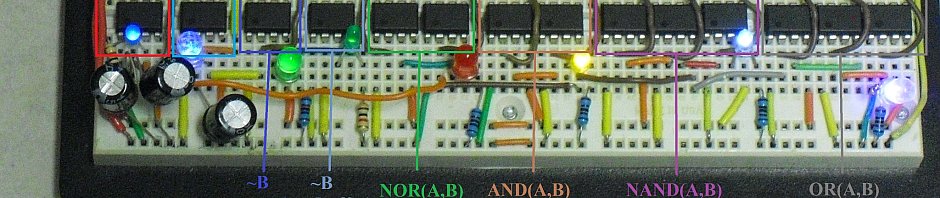

The algorithm chosen is a simple not-quite-naïve for loop, checking each odd integer for divisibility by the odd numbers up to and including its square root. More sophisticated algorithms such as using the Sieve of Eratosthenes can speed up the calculation — but the idea is to use the simplest reasonable implementation possible, so it can be run on very simple machines. This way, the computing speed of vintage devices can be compared to modern ones — sometimes with fascinating conclusions. Machine-internal timing is used where possible.

Here is the C version: Primespeed.c

Here is the FreeBasic version: Primespeed.bas

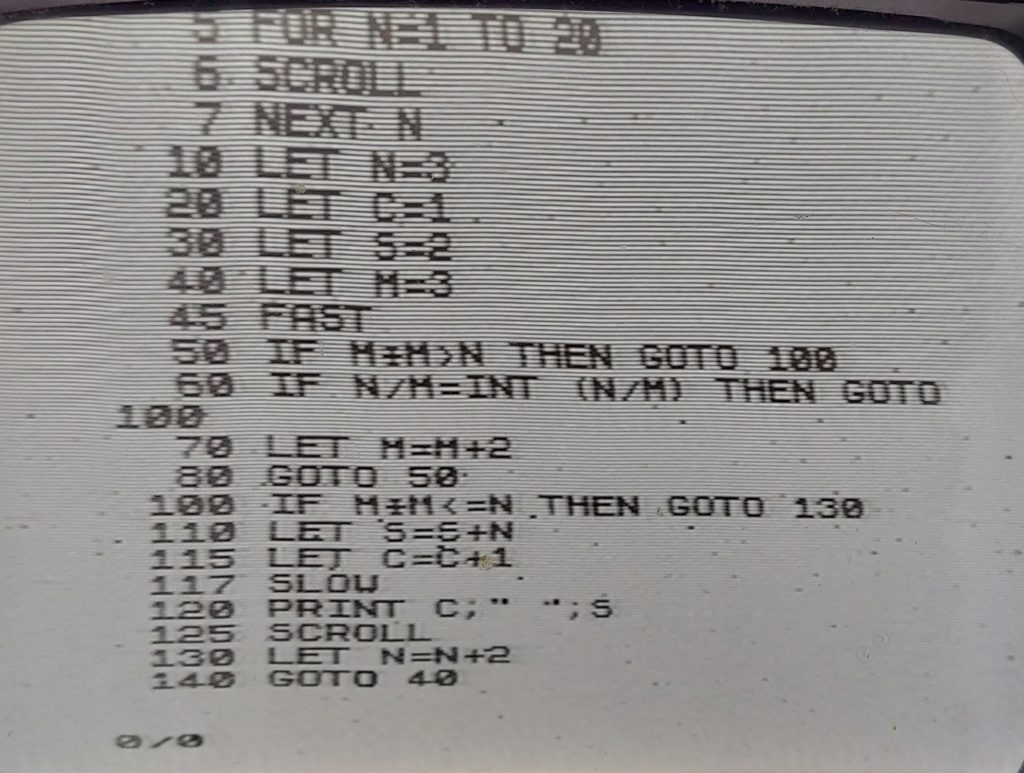

(Screenshot only for now; system still needs upload capability)

One interesting observation is that (for most setups where printing the data doesn’t take appreciable time) summing 10^(N+1) primes seems to always take about 33.3 times as long as summing only 10^N primes. This suggests a uniform way to scale the comparisons logarithmically, since each “level” (summing ten times as many primes) represents 33.3x as much computation. Scores for each machine under this scaling should be relatively consistent whether they’re calculating primes for a minute, an hour, or a week.

The Sinclair took about 175 seconds to calculate the sum of the first 100 primes, and 2526 seconds to compute the first 1,000. Assuming that each 10x increase in the number of primes computed represents a 33.3x increase in the number of computations, it’s reasonable to expect it to compute the sum of the first one million primes in about 175*(33.3^4) seconds (about 215.2 million seconds, or about 6.82 years.)

A good metric should be logarithmic for convenience. A reasonable one might be the log10 of the number of primes each machine would be expected to compute in 1000 seconds. With that, here are the results for various devices ranging from the 1980s to the present:

- Timex/Sinclair 1000: 1,000 primes in 2,526 seconds: 2.74

- TRS-80 Model 100: 10,000 primes in 3758 seconds: 3.62

- ESP32: 10,000,000 primes in 24,629.97 seconds: 6.09

- PocketChip: 1,000,000 primes in 460.38 seconds: 6.22

- Northlight (Core i7/980X): 100,000,000 primes in 12,706.8 seconds: 7.27

- Scientia (Core i9/9900): 100,000,000 primes in 9347.09 seconds: 7.36

- Samsung A71 5G phone: 100,000,000 primes in 7099.7 seconds: 7.44

Note that these are logarithmic scales; each additional point means roughly a 10x speedup.

Some interesting observations:

- The interpreted-BASIC machines are slowest, as was expected.

- WinFBE’s compiler seems to be slightly better at integer ops than gcc.

- Single-thread performance has not gotten significantly better in recent years.

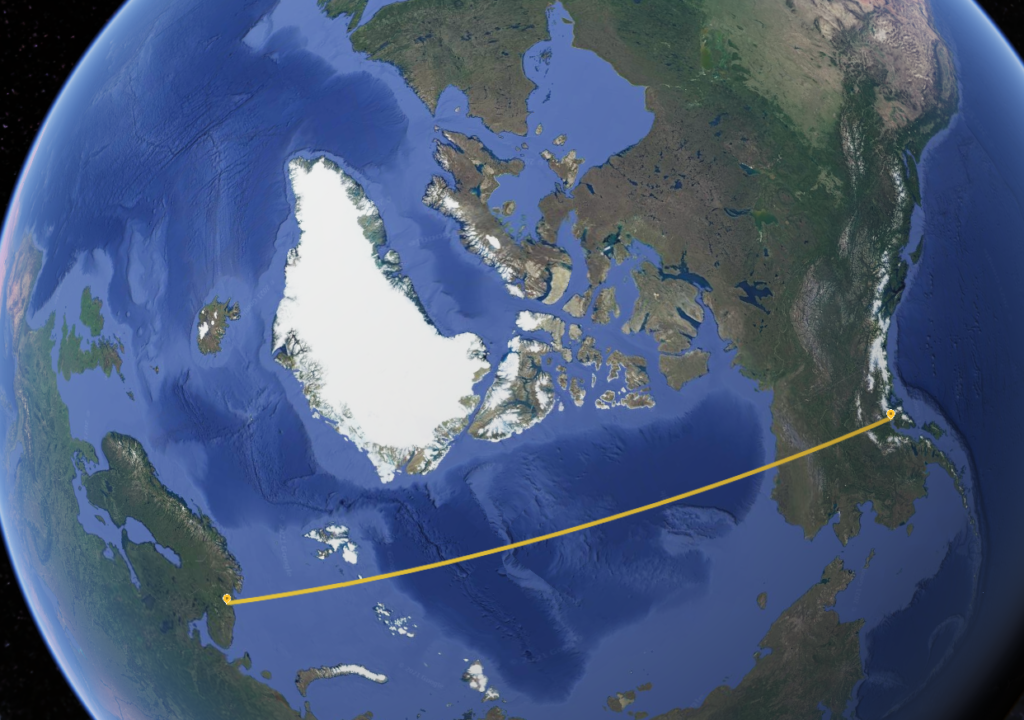

- If the slowest machine (the Sinclair) is compared to the speed of a literal snail, the fastest devices tested (desktops and a smartphone) would be about as fast as airliners.

…but most surprisingly, - My Samsung A71 5G smartphone beat everything, including the desktops, and is something like 50,000 times as fast as the Timex/Sinclair, at computing primes. That’s not surprising — but I wouldn’t have guessed it would outrun a modern Core i9(!)

Finding the first ten primes in my head took me about twelve seconds as I was going to sleep. This would result in an equivalent score of about 2.26 (but wouldn’t scale very far.)