Cars are pretty interesting, but generally not especially so from a Digital Electronics geek’s perspective. GPS navigation systems and fuel-consumption computers are cool, but for the most part, cars seem to be solidly the bailiwick of Mechanical types.

Relatively new cars, though, have a secret. Every new car sold in the US since 1996 is required to implement OBD-II, a collection of protocols for the interchange of information about various automotive parameters. Information such as engine RPM, relative load (torque), vehicle speed, coolant temperature, and exhaust oxygen content can be monitored in real time. Engine trouble codes identifying problems that arise while the vehicle is being driven are also recorded: when a problem is detected, the engine computer stores the trouble code along with a snapshot of the various system parameters at the time. This (in theory, at least) eliminates the all-too-familiar issue of car troubles that magically cure themselves while the vehicle is in for service. Even a hard-to-diagnose problem, such as a loose electrical connection to an oxygen sensor, can be noted by the engine computer while the car is going down the road, together with a snapshot of the conditions under which the error happened. It’s as if a mechanic’s assistant (or a Flight Engineer from the days of propliners) were always along for the ride.

All of this is good news to an automotive mechanic — but for a Digital geek, it can be described in one single word: DATA, and lots of it. Real-time engine performance data can be recorded and used for all kinds of things from learning to drive more efficiently (using real-time fuel efficiency calculations) to detecting possible problems before they cause trouble (for instance, detecting transmission slippage or changes in fuel consumption or oil pressure). All that is needed is some kind of interface to the OBD-II system, and some software to collect and interpret the data.

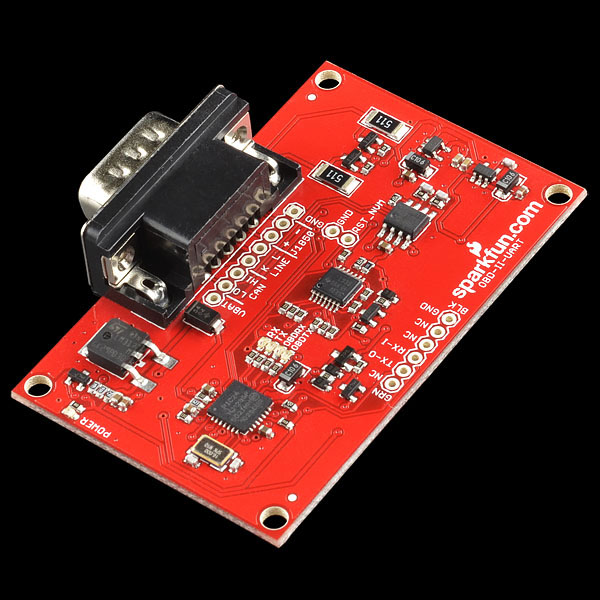

SparkFun, as usual, has come up with a nearly-turnkey solution to most of this. Their OBD-II interface board, along with the associated cable, allows semiautomated querying of the various bus protocols supported by OBD-II. Requests are translated into CAN, PWM, VPW, DCL, and various other protocols (OBD-II has more official “languages” than Switzerland). The computer side of the interface board is much easier to work with: standard 5-volt serial TTL signaling. SparkFun even helpfully provides an FTDI cable that translates this TTL interface into a virtual COM port on a PC.

SparkFun's OBD-II interface board.

The OBD-II connector is always located in the passenger compartment, within three feet of the driver. Here is a database showing the approximate location for many makes and models. For my Escort and my wife’s Sable, the connector was found below the driver’s side dashboard, to the left of the steering column.

Once the board is connected to the OBD-II connector and to the computer, a few commands are all that is needed to start reading data. Sending a query code of 010C (mode 01, PID 0C) returns the engine speed, encoded in hex in units of a quarter RPM. Sending a query of 010D returns the current vehicle speed in km/h (also encoded in hex).
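To make the hex encoding concrete, here is a minimal Python sketch of the decoding step. It assumes the reply arrives as space-separated hex bytes in the standard mode 01 layout: a `41` response byte, the PID that was asked for, then the data bytes. The function names and the sample reply strings are made up for illustration and are not taken from SparkFun's documentation; the exact reply formatting of a given board may differ.

```python
def parse_reply(reply):
    """Turn a reply such as '41 0C 1A F8' into a list of byte values."""
    return [int(token, 16) for token in reply.split()]


def decode_rpm(reply):
    """Decode a reply to query 010C: two data bytes, in quarter-RPM units."""
    data = parse_reply(reply)
    if len(data) < 4 or data[0] != 0x41 or data[1] != 0x0C:
        raise ValueError("not a reply to 010C: %r" % reply)
    # The two data bytes form a 16-bit count of quarter revolutions per minute.
    return (data[2] * 256 + data[3]) / 4


def decode_speed_kmh(reply):
    """Decode a reply to query 010D: one data byte, already in km/h."""
    data = parse_reply(reply)
    if len(data) < 3 or data[0] != 0x41 or data[1] != 0x0D:
        raise ValueError("not a reply to 010D: %r" % reply)
    return data[2]


if __name__ == "__main__":
    # Hypothetical sample replies, used only for illustration.
    print(decode_rpm("41 0C 1A F8"))     # 1726.0
    print(decode_speed_kmh("41 0D 3C"))  # 60
```

Checking the echoed PID before decoding is a cheap way to catch a reply that belongs to a different query.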

Once you’re connected, the next step is learning the language. SparkFun provides a good bit of documentation and some examples on their site, and Wikipedia also has a nice list of many of the basic PIDs and what information they return.

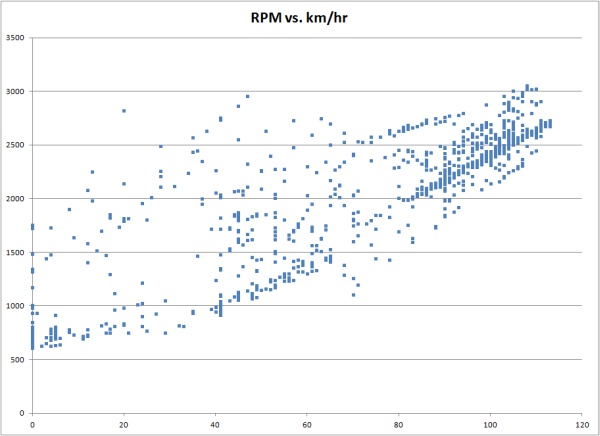

With a bit of programming, this information can be recorded, logged, and presented in nearly any format imaginable. Here, for example, is a plot of engine RPM vs. vehicle speed, for part of a recent trip I took.
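As a rough sketch of what that "bit of programming" might look like, the Python below logs timestamped RPM and speed samples to CSV, ready to be plotted afterwards. This is not the program used for the plot. The `read_sample` callable is a hypothetical stand-in for whatever code actually queries the board, and the column names are arbitrary choices.

```python
import csv
import io
import time


def log_samples(read_sample, out_file, count, interval_s=0.0):
    """Write `count` rows of (elapsed time, rpm, speed) to `out_file` as CSV.

    `read_sample` is a hypothetical callable returning an (rpm, speed_kmh)
    pair; in real use it would send 010C and 010D to the interface board.
    """
    writer = csv.writer(out_file)
    writer.writerow(["time_s", "rpm", "speed_kmh"])
    start = time.monotonic()
    for _ in range(count):
        rpm, speed_kmh = read_sample()
        elapsed = round(time.monotonic() - start, 3)
        writer.writerow([elapsed, rpm, speed_kmh])
        time.sleep(interval_s)
    return count


if __name__ == "__main__":
    # Fake data source so the sketch runs without a car attached.
    buffer = io.StringIO()
    log_samples(lambda: (1726.0, 60), buffer, count=3)
    print(buffer.getvalue())
```

Passing the data source in as a callable keeps the logging separate from the serial details, so the same loop works with a real board or with recorded test data.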

The availability of the OBD-II bus not only allows the collection of data using a PC, but also provides a way to implement a custom vehicle computer system using an Arduino or similar microcontroller. (More about this later!)