Flight simulation is a fun hobby, and it has come a long way: from interesting-but-not-especially-realistic vector-graphic scenes of flying something that vaguely resembled a Cessna 182RG around the Chicagoland area, to impressive ray-traced simulations of just about every type of aircraft out there, often backed by highly accurate physics models of aircraft performance.

in what we are assured is a Cessna 182RG. (Image: Wikipedia)

Of course, realism takes a hit when you interact with these glorious models through a computer screen, manipulating controls by clicking on them and dragging them with a mouse. If real aircrews had to fly this way, they could make it work, but they would declare an emergency because of how difficult (and therefore risky) it makes things. It would be nice to use the same kind of physical controls in the simulator that the actual aircraft uses, but this has generally been a hassle, because each control has to be programmed individually to interact with an API such as SimConnect.

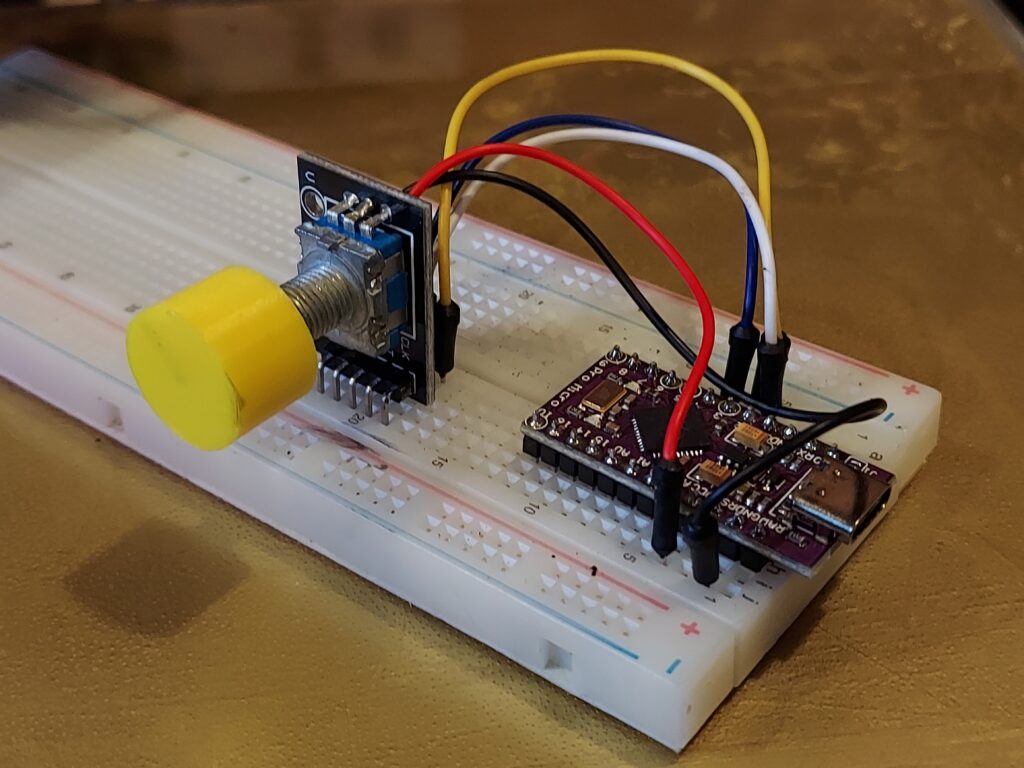

MobiFlight (freeware) makes connecting hardware inputs and outputs much simpler. Connect your controls to I/O pins on supported Arduino models, upload the MobiFlight firmware to the board, download and launch the connector software on the PC, and you’re up and running. Make a change on the physical controls, and it will be reflected in the sim.

This, plus plugging in the board via USB, is all the hardware setup needed!

As a first test, I implemented a heading bug selector and verified that it works in the Cessna 172, the Cirrus Vision Jet, and the PMDG 737-700. From here, I plan to recreate the 737 controls panel by panel. The eventual goal is to have at least the main control panel in the correct position, so I can look over in VR and find each control where it should be, without having to see the physical device.
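With MobiFlight, none of this has to be written by hand. Still, for anyone curious what a heading bug knob actually has to do, here is a minimal Python model. It assumes the selector is built around a common two-channel (A/B) quadrature rotary encoder that produces four state changes per detent and is wired with pull-ups, so both channels read 1 when the knob sits in a detent. These are assumptions about typical hardware, not details from this build. The names `CW_SEQUENCE`, `step`, and `HeadingBugKnob` are hypothetical, and this is not MobiFlight's firmware code.

```python
"""Minimal model of a rotary-encoder heading bug knob (hypothetical, not MobiFlight code)."""

# Clockwise order of the two-bit (A, B) encoder states, in Gray code:
# exactly one channel changes between neighbouring entries.
# If a real knob turns the "wrong" way, swapping the A and B wires fixes it.
CW_SEQUENCE = [0b00, 0b01, 0b11, 0b10]


def step(prev: int, curr: int) -> int:
    """Return +1 for a clockwise transition, -1 for counter-clockwise, 0 otherwise."""
    i = CW_SEQUENCE.index(prev)
    if curr == CW_SEQUENCE[(i + 1) % 4]:
        return 1
    if curr == CW_SEQUENCE[(i - 1) % 4]:
        return -1
    # Same state (no movement) or a two-state jump (a missed or bouncing read).
    return 0


class HeadingBugKnob:
    """Turns raw A/B pin readings into a heading bug value in degrees (0-359)."""

    def __init__(self, heading: int = 0, steps_per_detent: int = 4, rest_state: int = 0b11):
        # Assumption: pull-up resistors, so both channels read 1 at each detent.
        self.heading = heading % 360
        self.steps_per_detent = steps_per_detent
        self._state = rest_state
        self._accum = 0  # state changes counted since the last full detent

    def update(self, a: int, b: int) -> int:
        """Feed one reading of channels A and B; return the current heading."""
        curr = (a << 1) | b
        self._accum += step(self._state, curr)
        self._state = curr
        # Only a complete detent's worth of steps moves the bug by one degree,
        # so contact bounce (+1 then -1) cancels out without changing the heading.
        if self._accum >= self.steps_per_detent:
            self._accum -= self.steps_per_detent
            self.heading = (self.heading + 1) % 360
        elif self._accum <= -self.steps_per_detent:
            self._accum += self.steps_per_detent
            self.heading = (self.heading - 1) % 360  # Python's % keeps this in 0..359
        return self.heading


if __name__ == "__main__":
    knob = HeadingBugKnob(heading=358)
    # Three clockwise detents: 11 -> 10 -> 00 -> 01 -> 11, repeated.
    for a, b in [(1, 0), (0, 0), (0, 1), (1, 1)] * 3:
        knob.update(a, b)
    print(knob.heading)  # prints 1 (358 wraps past 359 to 0, then 1)
```

The Gray-code table is what lets the knob tell direction apart: only one channel changes per step, so the order in which A and B flip reveals which way the knob is turning. Wrapping with modulo 360 means turning past 359 lands on 0, as a heading bug should.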